The New Economics of an AI-Powered SIEM

Breaking down traditional SIEM costs and how AI agents enable scalable security operations

Welcome to Detection at Scale, a weekly newsletter diving into SIEM, generative AI, cloud-centric security monitoring, and more.

Key Points of today’s post:

Traditional SIEM economics create unsustainable cost trajectories as data volumes grow, forcing teams to choose between visibility and budget control.

A significant cost of security monitoring lies in human capital – from deployment and maintenance to triage and investigations.

AI fundamentally changes this equation by automating routine tasks and enabling more efficient resource utilization as organizations scale.

Security operations leaders face an unsustainable equation: traditional SIEM pricing models that charge steeply per GB ingested create perverse incentives against comprehensive visibility. The modern attack surface generates massive amounts of security telemetry, forcing teams to make difficult tradeoffs between security coverage and cost control. While ingesting everything might seem ideal for compliance and investigation, the reality is more nuanced. High-volume data sources like cloud audit logs and network traffic can quickly drive costs into the millions, yet teams need this visibility for incident response and compliance requirements. Meanwhile, as the organizational cost of breaches continues to rise, the need for comprehensive monitoring grows stronger – yet security teams struggle to justify expanding SIEM budgets when the return on investment remains difficult to quantify.

Luckily, technological shifts are fundamentally reshaping SIEM economics through the combination of modern data lake architectures, intelligent AI agents, and new techniques for efficient data processing. Platforms that decouple storage from compute allow teams to leverage cost-effective cloud storage while performing the necessary pre-processing for future analysis. AI agents help teams extract more value from existing data through automated enrichment and correlation at machine speed. While AI introduces computational overhead, the efficiency gains in alert triage, detection engineering, and incident response can significantly offset human capital costs as organizations scale.

This post explores how combining AI and modern architectures creates new opportunities for sustainable SIEM operations. We'll examine how AI can reduce human capital costs through automated workflows, optimize technical resource consumption through intelligent processing, and help teams extract more value from their security data. By understanding these dynamics, security leaders can make informed decisions about where and how to apply AI for maximum economic benefit. Let's dive in.

The Human Cost

The most significant cost (and bottleneck) in security operations isn't software licensing or data storage – it's human capital. From deployment and maintenance to alert investigation and response, teams invest thousands of hours into operationalizing their SIEM. For a mid-sized enterprise, these costs often exceed $1M annually in salary alone before considering training, tooling, and operational overhead. These costs compound with team turnover – each departure means lost institutional knowledge and months of training investment for replacements. As organizations grow, these human capital costs tend to scale linearly with data volume and infrastructure complexity, creating an unsustainable economic model.

Deployment & Onboarding

SIEM deployment is a complex technical undertaking that demands expertise across cloud architecture, data engineering, and security operations. Even with SaaS deployments, teams must carefully design their logging architecture to balance coverage, performance, and cost. This includes log routing and centralization strategies, IAM configurations for secure access, and scaling considerations for high-volume sources.

A typical enterprise SIEM deployment requires 3-6 months of dedicated engineering time for initial setup. Many projects stall in this phase, consuming resources without delivering security value. The challenge compounds when teams must decide how to handle different data sources. Cloud infrastructure generates high volumes of data with critical security context, while SaaS applications present diverse formats. Traditional on-premise infrastructure, though legacy, remains necessary and must be integrated into the monitoring strategy.

Each data source also requires careful consideration based on its security and compliance use cases. For every log source, teams must decide on filtering, retention, and storage tiering to manage costs without sacrificing security visibility or violating compliance requirements.
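To make these per-source decisions concrete, here is a minimal sketch of how a team might record them as data and check them before onboarding. The class, field names, and example values are hypothetical and not tied to any SIEM product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogSourcePolicy:
    """Hypothetical onboarding decisions for one log source."""
    name: str
    drop_event_types: frozenset  # event types filtered out at ingestion
    hot_days: int                # days kept in fast, searchable storage
    retention_days: int          # total retention across all tiers
    compliance_min_days: int = 0  # minimum retention a compliance regime requires


def policy_problems(policy: LogSourcePolicy) -> list:
    """Return human-readable problems; an empty list means the policy is consistent."""
    problems = []
    if policy.retention_days < policy.compliance_min_days:
        problems.append(
            f"{policy.name}: retention {policy.retention_days}d is below "
            f"the compliance minimum of {policy.compliance_min_days}d"
        )
    if policy.hot_days > policy.retention_days:
        problems.append(
            f"{policy.name}: hot tier ({policy.hot_days}d) exceeds "
            f"total retention ({policy.retention_days}d)"
        )
    return problems


# Example values are assumptions for illustration only.
audit = LogSourcePolicy("cloud_audit", frozenset({"health_check"}), 30, 180, 365)
for problem in policy_problems(audit):
    print(problem)
```

Keeping the policy as data means it can be reviewed and version-controlled like any other change, which is the main point of the sketch.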

Alerting Learning Curves

The next phase of SIEM deployment is extracting valuable signals from the logs that teams invest time and money in onboarding. This starts with a monitoring strategy focused on operationalizing signal creation and building response playbooks to handle this signal. Success in these areas requires in-depth knowledge of how the SIEM declares detection and response logic.

SIEM platforms typically offer three main approaches to detection logic. Traditional programming languages leverage existing software engineering practices and enable version control, though they require platform-specific optimization knowledge. Domain-specific languages are optimized for security queries but can lock teams into vendor ecosystems and create knowledge transfer challenges. No-code platforms offer quick startup with pre-built content but limit customization and make testing more difficult. Each approach presents tradeoffs between ease of use, flexibility, and long-term maintainability.
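As an illustration of the first approach, here is a minimal sketch of a detection written in a general-purpose language with its tests stored alongside it. The event field names and the `rule` function shape are assumptions for this example, not a specific vendor's schema or API.

```python
from typing import Any, Mapping


def rule(event: Mapping[str, Any]) -> bool:
    """Return True for a successful console login without MFA (assumed fields)."""
    return (
        event.get("event_name") == "ConsoleLogin"
        and event.get("outcome") == "success"
        and not event.get("mfa_used", False)
    )


# Test cases are versioned with the rule and can run in CI on every change.
TEST_CASES = [
    ({"event_name": "ConsoleLogin", "outcome": "success", "mfa_used": False}, True),
    ({"event_name": "ConsoleLogin", "outcome": "success", "mfa_used": True}, False),
    ({"event_name": "ConsoleLogin", "outcome": "failure", "mfa_used": False}, False),
    ({"event_name": "ListBuckets", "outcome": "success"}, False),
]


def run_tests() -> bool:
    """True only if the rule matches the expected result for every case."""
    return all(rule(event) == expected for event, expected in TEST_CASES)


if __name__ == "__main__":
    print("all tests passed" if run_tests() else "rule regression detected")
```

The tradeoff described above shows up here: the rule is easy to test and review, but nothing in the code itself says how efficiently a given platform will execute it.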

Beyond language choice, teams must also understand platform-specific nuances around query performance optimization, data model relationships, alert deduplication strategies, and integration patterns with external tools and enrichment sources. Investing in learning these systems compounds as security teams scale and new use cases emerge. Organizations must balance the initial learning curve against long-term operational flexibility and the ability to adapt to evolving threats.
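Alert deduplication is one of those nuances that is easy to describe and fiddly in practice. The sketch below shows one simple strategy under stated assumptions: alerts are grouped by rule and entity, and an alert joins an existing group if it arrives within a fixed window of that group's first alert. The field names (`rule_id`, `entity`, `ts`) are hypothetical, and real platforms may use different windowing semantics.

```python
from typing import Iterable


def deduplicate(alerts: Iterable[dict], window_seconds: int = 3600) -> list:
    """Collapse alerts that share (rule_id, entity) within window_seconds
    of the first alert in their group.

    Assumes alerts are sorted by 'ts' (epoch seconds).
    """
    open_groups = {}  # (rule_id, entity) -> most recent group for that key
    result = []
    for alert in alerts:
        key = (alert["rule_id"], alert["entity"])
        group = open_groups.get(key)
        if group is not None and alert["ts"] - group["first_ts"] <= window_seconds:
            group["count"] += 1  # duplicate: count it, do not emit a new alert
            continue
        group = {"rule_id": key[0], "entity": key[1], "first_ts": alert["ts"], "count": 1}
        open_groups[key] = group
        result.append(group)
    return result
```

A fixed window anchored on the first alert is the simplest choice; a sliding window that resets on every duplicate suppresses more noise but can hide a long-running incident behind a single alert.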

Operational Overhead

SIEM operations require constant attention across multiple layers, each demanding specialized expertise and ongoing maintenance to ensure reliable security monitoring.

Teams must continuously monitor parser health, update log configurations, and validate data quality. When cloud services update their log formats or add new fields, engineers must quickly modify parsers to prevent data loss or corruption.
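One lightweight way to catch format changes early is to compare the fields seen in a recent sample of events against the fields a parser expects. This is a minimal sketch under that assumption; the function name and field names are illustrative, and it only inspects top-level keys.

```python
def schema_drift(expected_fields: set, events: list) -> dict:
    """Compare top-level fields seen in a sample of events with the expected set.

    'new' holds fields the parser does not know about yet;
    'missing' holds expected fields that no sampled event contained.
    """
    seen = set()
    for event in events:
        seen.update(event.keys())
    return {"new": seen - expected_fields, "missing": expected_fields - seen}


# Hypothetical sample: the upstream service added 'source_ip' and dropped 'action'.
sample = [{"ts": 1, "user": "alice", "source_ip": "10.0.0.1"}]
drift = schema_drift({"ts", "user", "action"}, sample)
if drift["new"] or drift["missing"]:
    print(f"schema drift detected: {drift}")
```

Running a check like this on a schedule turns a silent parsing failure into an explicit signal that a parser needs attention.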

Rule maintenance extends far beyond initial creation. Each rule becomes a mini service requiring monitoring, debugging, and periodic optimization. Detection engineers must handle edge cases gracefully, tune correlation windows, and update detection logic when dependent services change.

Teams invest significant effort in maintaining alert routing logic, updating integration points with ticketing systems, and ensuring notification channels remain operational and useful to the broader organization. Response playbooks must evolve alongside changes in security controls, attack patterns, and team processes.

These operational demands create a compounding effect: each new log source, detection rule, or integration adds to the maintenance burden. Without careful attention to operational efficiency, teams spend more time maintaining the system than using it for actual security monitoring.

Technical Cost Dynamics

The technical costs of security monitoring extend beyond infrastructure requirements. Each architectural decision—from log filtering to detection logic—has cascading effects on resource consumption and operational effectiveness. Understanding these dynamics becomes especially crucial, given how SIEM platforms approach licensing and billing. These technical costs can escalate rapidly as organizations grow – whether through business expansion or cloud adoption. Each new application, cloud service, or business unit typically brings its own set of security telemetry that must be collected, processed, and analyzed.

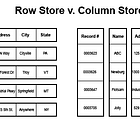

SIEM Licensing Models

Traditional SIEM pricing based on ingestion volume has created enduring challenges for security teams. While straightforward to understand – pay for what you ingest – this model often forces teams to make difficult tradeoffs between visibility and cost control. A large enterprise might generate terabytes of logs daily, but ingestion-based pricing makes comprehensive collection prohibitively expensive, particularly in SaaS models. Although unit prices have begun falling, ingestion-based pricing still adds friction when onboarding critical log sources, often resulting in custom workarounds.

Some vendors have attempted to address this through alternative models based on organizational metrics like employee count or endpoint numbers. While these approaches can provide more predictable costs, they often don't align with actual usage patterns or security needs. A small company with extensive cloud infrastructure might generate far more security telemetry than a larger organization with a simpler technology footprint.
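The mismatch between the two models is easiest to see with arithmetic. The sketch below compares them for two hypothetical organizations; every number in it (prices, volumes, headcounts) is a placeholder chosen for illustration, not a real vendor rate.

```python
def ingest_based_cost(daily_gb: float, price_per_gb: float, days: int = 365) -> float:
    """Annual cost when billed per GB ingested."""
    return daily_gb * price_per_gb * days


def seat_based_cost(employees: int, price_per_employee: float) -> float:
    """Annual cost when billed per employee."""
    return employees * price_per_employee


# Placeholder figures, assumed for illustration only.
PRICE_PER_GB = 0.50
PRICE_PER_EMPLOYEE = 60.0

organizations = {
    "small, cloud-heavy": {"employees": 200, "daily_gb": 500.0},
    "large, simple footprint": {"employees": 5000, "daily_gb": 100.0},
}

for name, org in organizations.items():
    by_ingest = ingest_based_cost(org["daily_gb"], PRICE_PER_GB)
    by_seat = seat_based_cost(org["employees"], PRICE_PER_EMPLOYEE)
    print(f"{name}: ingest-based ${by_ingest:,.0f}/yr, seat-based ${by_seat:,.0f}/yr")
```

Under these assumed inputs the two models rank the organizations in opposite order, which is the misalignment the paragraph above describes.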

Storage Economics

Storage decisions start with requirements: what data must remain quickly accessible for detection and response versus what can be archived for compliance or post-incident investigation. While the cloud has made storage relatively inexpensive, the actual cost emerges in data movement and transformation. Teams often default to keeping everything "hot" out of fear of missing crucial evidence, yet this approach rarely proves cost-effective in the longer term.

Modern SIEM architectures leverage cloud storage tiers to balance accessibility and cost. Critical security telemetry for active detection remains in hot storage, while compliance-focused logs move to cold storage after preprocessing. This preprocessing step is crucial – simply dumping raw logs into cold storage creates an enormous cost burden when that data needs to be analyzed during an incident. Teams must carefully consider their retention requirements and investigation patterns when designing these tiering strategies.
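A rough way to reason about tiering is to estimate the steady-state monthly storage bill for a given hot window. The sketch below does that under simplifying assumptions: constant daily volume, a flat per-GB monthly price for each tier, and no retrieval or data-movement charges. The prices are placeholders, not quotes from any cloud provider.

```python
def monthly_storage_cost(
    daily_gb: float,
    retention_days: int,
    hot_days: int,
    hot_price_per_gb: float,
    cold_price_per_gb: float,
) -> float:
    """Steady-state monthly storage cost with data split across hot and cold tiers."""
    hot_gb = daily_gb * min(hot_days, retention_days)
    cold_gb = daily_gb * max(retention_days - hot_days, 0)
    return hot_gb * hot_price_per_gb + cold_gb * cold_price_per_gb


# Placeholder inputs, assumed for illustration only.
DAILY_GB = 100.0
HOT_PRICE, COLD_PRICE = 0.10, 0.01

all_hot = monthly_storage_cost(DAILY_GB, 365, 365, HOT_PRICE, COLD_PRICE)
tiered = monthly_storage_cost(DAILY_GB, 365, 30, HOT_PRICE, COLD_PRICE)
print(f"all hot: ${all_hot:,.0f}/month, 30 days hot then cold: ${tiered:,.0f}/month")
```

The model deliberately leaves out the cost of querying cold data during an incident, which is where unprocessed raw logs become expensive, so treat it as a lower bound for the tiered option.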

Compute Costs

Compute usage in modern SIEMs splits across two primary activities: signal creation and data analysis. Detection rules consuming compute resources must justify their cost through high-fidelity alerts that drive real security value. Poorly optimized rules that process unnecessary data or perform inefficient correlations waste resources without improving security posture.

Query optimization begins during log onboarding. Effective filtering and parsing not only reduce storage costs but also minimize the compute needed for analysis. Teams must balance the cost of enrichment and correlation against the operational benefits – adding context during ingestion may cost more upfront but can significantly reduce investigation time and compute needs during incidents.
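Filtering at ingestion can be as simple as dropping event types that no detection or investigation uses. Here is a minimal sketch that measures the effect on serialized size; the event types, field names, and sample events are hypothetical.

```python
import json
from typing import Iterable

# Hypothetical low-value event types; each team would derive its own list.
DROP_EVENT_TYPES = frozenset({"health_check", "heartbeat"})


def filter_events(events: Iterable[dict], drop_types: frozenset = DROP_EVENT_TYPES) -> list:
    """Keep only events whose 'event_type' is not in drop_types."""
    return [e for e in events if e.get("event_type") not in drop_types]


def serialized_bytes(events: Iterable[dict]) -> int:
    """Approximate stored size as the UTF-8 length of each event's JSON form."""
    return sum(len(json.dumps(e).encode("utf-8")) for e in events)


events = [
    {"event_type": "health_check", "target": "lb-1", "status": "ok"},
    {"event_type": "login", "user": "alice", "outcome": "success"},
    {"event_type": "heartbeat", "agent": "host-42"},
    {"event_type": "role_change", "user": "bob", "role": "admin"},
]

kept = filter_events(events)
saved = 1 - serialized_bytes(kept) / serialized_bytes(events)
print(f"kept {len(kept)} of {len(events)} events, {saved:.0%} fewer bytes to store and scan")
```

Every byte dropped here is a byte that no later query has to scan, so the saving applies to compute as well as storage.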

The shift to data lake architectures has made these tradeoffs more explicit. While decoupled storage and compute offer more flexibility, they require teams to think carefully about resource allocation. Each query, whether from a detection rule or analyst investigation, consumes resources that directly impact the bottom line. This creates an imperative to optimize not just for security effectiveness but also for computational efficiency.

The AI Efficiency Multiplier

AI agents will fundamentally reshape SIEM economics by addressing the core human capital challenges while introducing new efficiencies in technical operations. Rather than simply automating existing processes, these agents work alongside security teams to amplify their capabilities and reduce operational overhead.

Detection Engineering Democratization

AI will fundamentally change how teams create detections. Instead of requiring expertise in complex query languages or parsing specifications, teams can describe behaviors they want to detect in natural language. AI agents translate these requirements into optimized rules, handling the technical implementation details while ensuring performance and reliability. This democratizes detection engineering across the security team, regardless of coding expertise, and saves the enormous amounts of time otherwise spent hunting through several open tabs.

Investigation Acceleration

By maintaining context across incidents and understanding alerting patterns, AI agents streamline the analysis process. These agents automatically gather relevant context for alerts based on enrichments, eliminating the manual effort of correlating data across multiple sources. They learn from past resolved incidents to suggest investigation steps, helping analysts follow proven paths to resolution. They can identify meaningful connections between seemingly unrelated events through sophisticated pattern recognition, accelerating triage decisions and reducing investigation time. This intelligent assistance reduces the time analysts spend on routine tasks while improving the overall quality and consistency of investigations.
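The context-gathering step is the most mechanical part of this workflow, and it can be sketched without any AI at all. The example below joins an alert with two hypothetical lookup tables, an asset inventory and an identity directory; the table contents and field names are assumptions for illustration. An agent would perform lookups like these before reasoning about the alert.

```python
def enrich_alert(alert: dict, asset_inventory: dict, identity_directory: dict) -> dict:
    """Return a copy of the alert with asset and user context attached.

    Unknown hosts or users get an empty context rather than raising an error.
    """
    enriched = dict(alert)  # shallow copy so the original alert is unchanged
    enriched["asset_context"] = asset_inventory.get(alert.get("host"), {})
    enriched["user_context"] = identity_directory.get(alert.get("user"), {})
    return enriched


# Hypothetical lookup tables, normally backed by a CMDB and an identity provider.
assets = {"db-01": {"owner": "payments", "criticality": "high"}}
identities = {"alice": {"department": "finance", "is_admin": False}}

alert = {"rule_id": "unusual_login", "host": "db-01", "user": "alice"}
print(enrich_alert(alert, assets, identities))
```

Doing this join once, automatically, replaces the manual pivoting between consoles that analysts would otherwise repeat for every alert.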

Accelerated Learning and Knowledge Transfer

One of AI's most powerful benefits will be assisting in preserving institutional knowledge. AI agents serve as always-on mentors and teammates who perfectly recall your environment's nuances, past incidents, and standard procedures. New analysts can ask questions in natural language and receive contextual guidance about investigation steps, relevant data sources, and historical patterns. This accelerates onboarding while preserving senior analyst bandwidth for complex investigations.

Proactive Operational Maintenance

Finally, proactive maintenance is perhaps where AI offers its most significant economic benefit. AI agents can automatically analyze alert patterns to suggest rule improvements, identify redundant detections, and highlight opportunities for optimization. This shift from reactive to proactive maintenance reduces operational costs and improves detection quality while incorporating a platform's best practices.
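The simplest version of this analysis needs only alert history with analyst dispositions. Here is a minimal sketch that flags rules firing often but rarely producing true positives; the thresholds, field names, and disposition labels are assumptions, and an agent would go further by proposing the actual rule change.

```python
from collections import Counter
from typing import Iterable


def noisy_rules(
    alerts: Iterable[dict],
    min_alerts: int = 20,
    max_true_positive_rate: float = 0.05,
) -> list:
    """Return rule IDs with at least min_alerts alerts and a true-positive
    rate at or below max_true_positive_rate, sorted alphabetically."""
    totals = Counter()
    true_positives = Counter()
    for alert in alerts:
        totals[alert["rule_id"]] += 1
        if alert["disposition"] == "true_positive":
            true_positives[alert["rule_id"]] += 1
    return sorted(
        rule_id
        for rule_id, total in totals.items()
        if total >= min_alerts and true_positives[rule_id] / total <= max_true_positive_rate
    )
```

The `min_alerts` floor keeps rarely firing rules off the list, since a rule with three alerts and no true positives has not yet produced enough evidence to judge.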

Reshaping SIEM Economics

AI represents a fundamental shift in this economic equation. By automating routine tasks, preserving institutional knowledge, and enabling more efficient resource utilization, AI agents can help break the linear relationship between security coverage and cost. However, realizing these benefits requires thoughtful preparation. Teams should focus first on optimizing their data foundation through smart architecture decisions, efficient detection engineering, and documented processes. These fundamentals deliver immediate operational benefits and position organizations to leverage AI capabilities as they mature.

The future of security operations will belong to teams that successfully balance human expertise with machine efficiency. While AI introduces its own computational overhead, the potential for improved detection quality and operational scale makes it a crucial investment for modern security programs. As AI capabilities mature, we'll see a transformation in how analysts and AI agents work together, with machines handling routine tasks and analysis at scale while humans focus on strategic decisions and novel threats. The key is starting this journey today with practical optimizations that set the stage for more intelligent security operations.