The Cursor Moment for Security Operations

How Model Context Protocol and AI coding agents are enabling the next evolution of detection engineering

Welcome to Detection at Scale, a weekly newsletter diving into SIEM, generative AI, cloud-centric security monitoring, and more.

Remember the first time you used Cursor? Instead of googling syntax or digging through documentation, you could simply describe what you wanted: "Add error handling to this script" or "Refactor this function to handle edge cases." The AI understood your intent, context, and constraints, then produced a solution.

That same transformation, from typing code to thinking in code, is now possible in security operations. For years, security teams have been stuck in a manual workflow that feels antiquated: researching attacker techniques across scattered sources, learning platform-specific query languages, writing rules by hand, and then spending weeks tuning them based on feedback.

Protocols like Model Context Protocol (MCP) transform security operations by connecting AI directly to the data and tools that power your security program. Rather than spending hours on manual work, AI agents can now directly query your SIEM data, learn about your environment's baseline, and suggest detection logic tailored to your infrastructure, threat models, and patterns.

We are moving from "how do I write a rule to detect X?" to "what should I be detecting, and how can AI help me build comprehensive coverage?" The teams that embrace this will be operating at a new level of strategic thinking.

The Cursor moment for security operations has arrived. The teams that embrace AI-augmented detection engineering today will lead tomorrow's security operations.

The Current State: Manual Detection Engineering

Detection engineering today follows a predictable but labor-intensive workflow that hasn't fundamentally changed in over a decade. Understanding this process and its inherent limitations is crucial for appreciating why AI-augmented approaches represent such a significant leap forward.

The Traditional Detection Engineering Process

Research Phase (30-60 minutes): Analyzing MITRE ATT&CK techniques, recent threat intelligence, and security research to understand attack patterns and indicators

Data Discovery (30-45 minutes): Querying your SIEM to identify relevant log sources, understand field mappings, and assess data quality for the target technique

Baseline Analysis (45-90 minutes): Manually analyzing normal behavior patterns to establish thresholds and identify legitimate edge cases that shouldn't trigger alerts

Rule Implementation (30-60 minutes): Writing detection logic in your platform's specific query language, optimizing for performance, and handling data variations

Testing and Tuning (ongoing over days/weeks): Deploying to production, analyzing false positives, gathering analyst feedback, and iteratively refining the rule

Note: These time estimates reflect an experienced detection engineer working on straightforward rules in a well-understood environment. However, industry research shows a significant gap: 86% of security professionals report it takes a week or more for end-to-end detection development, with complex behavioral detections often requiring 2-4 weeks when accounting for thorough testing, tuning, and organizational processes [1].

While individual steps seem manageable, the cumulative time investment adds up quickly. More importantly, each step requires specialized knowledge that creates bottlenecks and dependencies within security teams. The complexity multiplies when you consider the platform-specific nuances that detection engineers must master. Writing effective rules means understanding both attacker behavior and your SIEM's query language, data model, and performance characteristics.
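To make "adds up quickly" concrete, here is a minimal sketch that totals the per-phase estimates listed above. The phase names are just labels for this example, and the open-ended testing and tuning phase is left out because it has no fixed duration.

```python
# Per-phase estimates in minutes (low, high), taken from the list above.
# Testing and tuning is excluded because it runs for days or weeks.
PHASES = {
    "research": (30, 60),
    "data_discovery": (30, 45),
    "baseline_analysis": (45, 90),
    "rule_implementation": (30, 60),
}


def total_hours(phases):
    """Sum the low and high estimates and convert minutes to hours."""
    low = sum(lo for lo, _ in phases.values())
    high = sum(hi for _, hi in phases.values())
    return low / 60, high / 60


low_h, high_h = total_hours(PHASES)
print(f"One rule, before tuning: {low_h:.2f} to {high_h:.2f} hours")
# prints "One rule, before tuning: 2.25 to 4.25 hours"
```

Even in the best case, a single straightforward rule takes a couple of focused hours before any tuning begins.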

Consider building a rule to detect account compromise through unusual privileged access patterns. The goal is simple to state: identify when users access systems or perform actions outside their normal behavior patterns. The implementation, however, requires a deep understanding of your authentication logs, user role structures, business processes, and legitimate administrative workflows. You must distinguish between a compromised account and a DevOps engineer working late to resolve a production issue.
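A rough sketch of the naive version of this rule shows why it is harder than it sounds. The event shape (`user`, `time`, `privileged`) and the `min_hours_seen` threshold are assumptions made up for illustration, not fields from any particular SIEM.

```python
from collections import defaultdict
from datetime import datetime


def build_hour_baseline(history):
    """Map each user to the set of hours (0-23) in which they acted before."""
    baseline = defaultdict(set)
    for event in history:
        baseline[event["user"]].add(event["time"].hour)
    return baseline


def is_unusual(event, baseline, min_hours_seen=3):
    """Flag a privileged action at an hour the user has never been active.

    Users with too little history are not flagged, since there is no
    baseline to compare against.
    """
    seen = baseline.get(event["user"], set())
    if len(seen) < min_hours_seen:
        return False
    return event["privileged"] and event["time"].hour not in seen


# Hypothetical history: alice works at 9, 11, 14, and 16 on five days.
history = [
    {"user": "alice", "time": datetime(2025, 6, d, h), "privileged": True}
    for d in range(2, 7)
    for h in (9, 11, 14, 16)
]
baseline = build_hour_baseline(history)

late_night = {"user": "alice", "time": datetime(2025, 6, 9, 3), "privileged": True}
daytime = {"user": "alice", "time": datetime(2025, 6, 9, 11), "privileged": True}
print(is_unusual(late_night, baseline), is_unusual(daytime, baseline))
# prints "True False"
```

This version flags the 3 AM action whether it came from an attacker or from an engineer fixing a production outage. Telling those apart takes the business context that hour-of-day statistics cannot provide.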

This expertise bottleneck creates challenges for security teams. Detection engineers become critical dependencies for rule development and tuning. Knowledge about your environment's edge cases and business context often exists only in the minds of experienced team members. When these engineers leave, they take institutional knowledge that's difficult to replace or transfer to new team members. The result is detection coverage that's often suboptimal—either too broad, generating excessive false positives, or too narrow, missing sophisticated attacks that don't fit established patterns. Teams spend significant time maintaining existing rules rather than expanding coverage or adapting to new threats.

Emerging technologies are poised to transform this paradigm entirely.

Connecting Your SIEM with Leading AI Tools

Protocols like Model Context Protocol (MCP) represent a fundamental shift in how AI systems interface with enterprise data. Instead of copying and pasting log samples or describing your environment in prompts, MCP enables direct (and soon, more secure) connections between AI assistants and your security infrastructure.

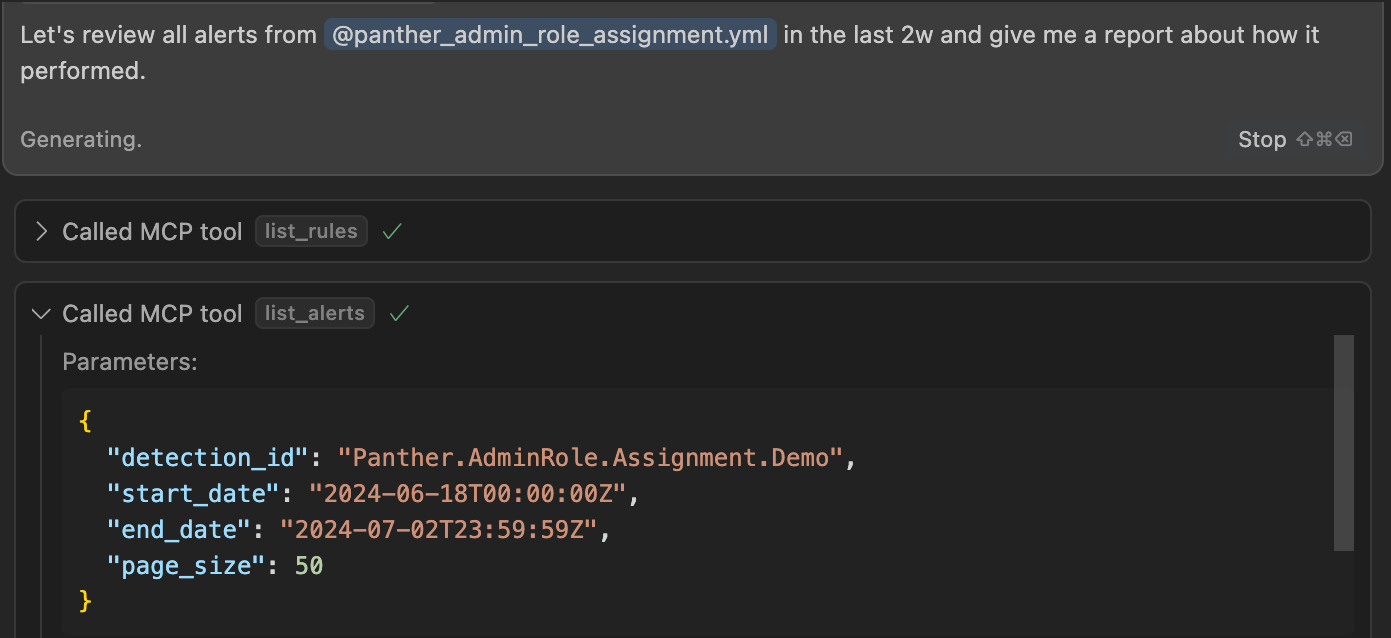

When you open Cursor, Claude, Goose, or your preferred AI assistant and ask, "What unusual authentication patterns have we seen in the past week?", the assistant can query your actual Okta logs, analyze your specific user behavior patterns, and provide insights based on your real environment.

MCP works by wrapping SIEM API endpoints with tools (typically Python or JavaScript functions) that can execute read-only queries, retrieve log samples, access metadata about your data, and much more, depending on the server’s capabilities. The AI can perform tasks across the scope of your entire SIEM without requiring manual documentation or constant context switching.
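As a rough illustration, the body of such a tool can be a plain Python function. The sketch below uses an in-memory SQLite table as a stand-in for the SIEM, and the table name `okta_logs`, its columns, the `MAX_ROWS` cap, and the function names are all assumptions for this example. A real server would register the function with an MCP SDK and call the SIEM's API instead.

```python
import sqlite3

MAX_ROWS = 100  # hypothetical cap so one tool call cannot pull a whole table


def make_demo_siem():
    """Stand-in for a SIEM backend: an in-memory SQLite table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE okta_logs (actor TEXT, event_type TEXT, outcome TEXT)")
    conn.executemany(
        "INSERT INTO okta_logs VALUES (?, ?, ?)",
        [
            ("alice", "user.session.start", "SUCCESS"),
            ("bob", "user.session.start", "FAILURE"),
            ("bob", "user.session.start", "FAILURE"),
        ],
    )
    return conn


def run_readonly_query(conn, sql):
    """Tool body: run a single SELECT and return the rows as dicts."""
    statement = sql.strip().rstrip(";")
    # Naive guard for the sketch; it also rejects ';' inside string literals.
    if not statement.lower().startswith("select") or ";" in statement:
        raise ValueError("only single SELECT statements are allowed")
    cursor = conn.execute(statement)
    columns = [col[0] for col in cursor.description]
    return [dict(zip(columns, row)) for row in cursor.fetchmany(MAX_ROWS)]


conn = make_demo_siem()
rows = run_readonly_query(
    conn,
    "SELECT actor, COUNT(*) AS failures FROM okta_logs "
    "WHERE outcome = 'FAILURE' GROUP BY actor",
)
print(rows)  # prints [{'actor': 'bob', 'failures': 2}]
```

The string check here is only a convenience. In practice the read-only guarantee should come from the credentials the tool uses, so that a cleverly phrased query cannot modify data.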

Consider another example. Instead of manually researching AWS CloudTrail events and figuring out which fields indicate privilege escalation, you can ask: "Show me examples of privilege escalation attempts in aws_cloudtrail logs from the past month." The AI knows which tools can help answer this question, then writes a precise query for your CloudTrail logs and produces a report in whatever style you specify.
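The logic behind an answer to that prompt might look something like the sketch below. It assumes CloudTrail records have already been parsed into dictionaries, and the watch list is an illustrative subset of IAM API calls, not a complete catalog of escalation paths.

```python
# IAM API calls often associated with privilege escalation.
# Illustrative subset only; a real rule would cover more paths.
ESCALATION_EVENTS = {
    "AttachUserPolicy",
    "AttachRolePolicy",
    "PutUserPolicy",
    "CreatePolicyVersion",
    "AddUserToGroup",
    "UpdateAssumeRolePolicy",
}


def find_escalation_candidates(events):
    """Return IAM events whose name is on the watch list."""
    return [
        e
        for e in events
        if e.get("eventSource") == "iam.amazonaws.com"
        and e.get("eventName") in ESCALATION_EVENTS
    ]


# Hypothetical sample records using standard CloudTrail field names.
sample = [
    {"eventSource": "iam.amazonaws.com", "eventName": "AttachUserPolicy",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/dev-1"},
     "errorCode": "AccessDenied"},
    {"eventSource": "iam.amazonaws.com", "eventName": "ListUsers",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/dev-1"}},
    {"eventSource": "s3.amazonaws.com", "eventName": "GetObject",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/dev-2"}},
]

for e in find_escalation_candidates(sample):
    print(e["userIdentity"]["arn"], e["eventName"], e.get("errorCode", "ok"))
# prints "arn:aws:iam::111122223333:user/dev-1 AttachUserPolicy AccessDenied"
```

Denied calls are kept on purpose, since a failed attempt to attach a policy is often as interesting as a successful one.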

Once the queries come back, the AI can turn the results into reports, and even restyle them to feel more… secure.

Note: When writing prompts for security analysis, use specific terms (like "look for privilege escalation events in the aws_cloudtrail table") instead of broader terms ("look for privilege escalation"). This helps avoid content filter triggers and reduces the chance of AI systems misinterpreting your intent.

Environment-Specific Detection Logic

Traditional SIEM rules use generic logic that needs customization for each environment. Through MCP, AI generates detection logic specifically tailored to your data sources, user behaviors, and infrastructure setup. It follows established conventions for setting thresholds, testing procedures, and alert formatting when suggesting new rules. This direct connection to your data also unlocks more sophisticated capabilities.

Orchestrating Across Your Security Stack

One of the most powerful aspects of MCP in security contexts is its ability to orchestrate actions across different platforms within the same AI session. While your SIEM may already centralize logs from various sources, MCP enables the AI to reach beyond log analysis into operational workflows. The AI can query your SIEM for suspicious activity, automatically create incident reports in Notion, check the current on-call rotation in PagerDuty, and send contextual alerts to the appropriate Slack channels—all within a single conversation.
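The shape of that single-conversation workflow can be sketched as one function that threads a finding through three integrations. Everything here is hypothetical: `Finding`, `Recorder`, `respond`, and the three callables are stubs for illustration and do not correspond to the real Notion, PagerDuty, or Slack APIs.

```python
from dataclasses import dataclass, field


@dataclass
class Finding:
    title: str
    severity: str
    details: str


@dataclass
class Recorder:
    """Stub integration that records calls instead of hitting a real API."""
    calls: list = field(default_factory=list)

    def __call__(self, *args):
        self.calls.append(args)
        return f"ref-{len(self.calls)}"


def respond(finding, create_report, get_on_call, notify):
    """Carry one finding through report, on-call lookup, and notification."""
    report_ref = create_report(finding.title, finding.details)
    owner = get_on_call("security")
    message = (
        f"[{finding.severity}] {finding.title} "
        f"(report {report_ref}, on call: {owner})"
    )
    notify("#sec-alerts", message)
    return message


create_report = Recorder()  # stands in for a document tool
notify = Recorder()         # stands in for a chat tool
finding = Finding("Unusual admin login", "high", "Login from a new country")
message = respond(finding, create_report, lambda team: "alice", notify)
print(message)
# prints "[high] Unusual admin login (report ref-1, on call: alice)"
```

The point of the sketch is that context (the report reference, the on-call owner) carries from one step to the next without a human copying it between tools.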

This cross-platform orchestration enables security workflows that would be extremely difficult to implement manually. Instead of switching between multiple tools and interfaces, the AI can execute complete incident response workflows while maintaining context throughout the process. The result is detection engineering that's more strategic, accurate, and aligned with your specific operational constraints.

The New Detection Engineering Workflow

The shift from manual rule implementation to AI-augmented detection engineering fundamentally changes how security teams approach their detection programs. Instead of focusing on individual rule writing, teams can now strategically address detection coverage while leveraging actual data from their environment.

This approach transforms detection engineering into a significantly more efficient workflow:

Document Business and Security Context: Create written documentation of your organization's security context, business processes, and operational patterns that the AI can reference. This includes details like: "Our development team deploys between 2-4 PM daily," "Service accounts with 'svc-' prefix perform automated tasks," and "Admin access outside business hours requires approval." This upfront investment pays dividends across all future detection development with only minor updates as needed.

Clearly Articulate Your Task: State the area you want to improve: "I want to audit our endpoint rules for excessive false positives," or "I need better detection coverage for cloud privilege escalation." This focused approach ensures the AI understands your strategic objectives rather than generating generic recommendations.

Analysis and Execution Plan: The AI then analyzes your relevant data sources, examines existing detection rules, and creates a comprehensive plan. For endpoint alert auditing, it might analyze your EDR alerts from the past month, identify patterns in false positives, and suggest specific rule modifications. The output may also suggest new rules based on real data.

Write, Test, and Stage: Then, have the AI write rules individually for your review and local testing. The AI can help generate test cases and validate that changes work as expected using available tools for your particular stack. Deploy the AI-suggested modifications to your staging environment, if available.

Promote and Maintain: Based on staging results, make final adjustments and promote successful changes to production. The AI maintains context throughout this process, learning from outcomes to improve future recommendations.
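To show how the documented context and the write-and-test steps fit together, here is a minimal sketch of a rule expressed as a Python function with its own test cases. The event fields, the 9 AM to 6 PM business hours, and the names `BusinessContext`, `rule`, and `run_tests` are assumptions for this example, not a specific platform's rule format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BusinessContext:
    service_prefix: str = "svc-"          # service accounts run automated tasks
    business_hours: range = range(9, 18)  # assumed 9 AM to 6 PM local time


def rule(event, ctx=BusinessContext()):
    """Alert on admin actions outside business hours that lack approval."""
    if event["user"].startswith(ctx.service_prefix):
        return False
    if not event["is_admin_action"]:
        return False
    if event["hour"] in ctx.business_hours:
        return False
    return not event.get("approved", False)


# Each case: (description, event, expected alert decision).
TEST_CASES = [
    ("service account at night",
     {"user": "svc-backup", "is_admin_action": True, "hour": 2}, False),
    ("admin during business hours",
     {"user": "alice", "is_admin_action": True, "hour": 15}, False),
    ("approved after-hours change",
     {"user": "alice", "is_admin_action": True, "hour": 23, "approved": True}, False),
    ("unapproved after-hours change",
     {"user": "bob", "is_admin_action": True, "hour": 23}, True),
]


def run_tests():
    """Return the names of failing cases; an empty list means all passed."""
    return [name for name, event, expected in TEST_CASES if rule(event) != expected]


print(run_tests())  # prints []
```

Keeping the context in one place means a change such as new business hours updates every rule that references it, and the test cases double as documentation of the edge cases you care about.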

This workflow represents a 10x improvement over traditional detection engineering. Where teams previously spent weeks iterating on individual rules, they can now systematically analyze and improve entire categories of detections in days. The AI's ability to maintain context across your entire detection program enables strategic improvements that were previously impractical to implement at scale.

Time Savings and Multiplier Effects

The new AI-augmented workflow represents a fundamental leap in detection engineering efficiency and capability. The 10x improvement comes from the AI's ability to systematically analyze entire categories of detections rather than optimizing individual rules one at a time.

Strategic vs. Tactical Improvements

Traditional detection engineering focused on tactical improvements: writing better individual rules, reducing specific false positives, or covering particular attack techniques. The AI-augmented approach enables strategic improvements: comprehensively auditing all endpoint detections, systematically reducing false positive rates across your entire program, or building coordinated detection coverage for complete attack chains.

This shift from tactical to strategic optimization creates compound benefits. Instead of improving one rule that might fire a few times per week, you can optimize detection categories that affect hundreds of alerts monthly. The AI's analytical capabilities enable improvements and new coverage that save hours of analyst time.

Quality and Consistency at Scale

AI-augmented detection engineering maintains consistent quality standards across your entire program. The AI applies lessons learned from one detection improvement to similar rules throughout your environment. When it identifies that certain service account patterns cause false positives in endpoint detection, it can systematically review and improve all related rules.
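A simple version of that review can be sketched as an audit over triaged alerts. The alert fields (`rule`, `user`, `disposition`), the rule names, and the `svc-` prefix are assumptions for illustration.

```python
from collections import Counter


def audit_false_positives(alerts, prefix="svc-"):
    """Per rule: false-positive rate and the share of FPs from service accounts."""
    totals = Counter()
    false_pos = Counter()
    svc_false_pos = Counter()
    for alert in alerts:
        rule_id = alert["rule"]
        totals[rule_id] += 1
        if alert["disposition"] == "false_positive":
            false_pos[rule_id] += 1
            if alert["user"].startswith(prefix):
                svc_false_pos[rule_id] += 1
    report = [
        {
            "rule": rule_id,
            "alerts": totals[rule_id],
            "fp_rate": false_pos[rule_id] / totals[rule_id],
            "svc_share_of_fps": (
                svc_false_pos[rule_id] / false_pos[rule_id]
                if false_pos[rule_id]
                else 0.0
            ),
        }
        for rule_id in totals
    ]
    # Noisiest rules first.
    return sorted(report, key=lambda r: r["fp_rate"], reverse=True)


# Hypothetical triaged alerts from the past month.
alerts = [
    {"rule": "edr.suspicious_powershell", "user": "svc-deploy", "disposition": "false_positive"},
    {"rule": "edr.suspicious_powershell", "user": "svc-backup", "disposition": "false_positive"},
    {"rule": "edr.suspicious_powershell", "user": "carol", "disposition": "false_positive"},
    {"rule": "edr.suspicious_powershell", "user": "dave", "disposition": "true_positive"},
    {"rule": "edr.credential_dump", "user": "erin", "disposition": "true_positive"},
    {"rule": "edr.credential_dump", "user": "frank", "disposition": "true_positive"},
]

report = audit_false_positives(alerts)
print(report[0]["rule"], report[0]["fp_rate"])
# prints "edr.suspicious_powershell 0.75"
```

A rule with a high false-positive rate and a high service-account share is a good candidate for the same exclusion pattern across every related rule.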

This consistency extends to coding standards, testing practices, and documentation quality. The AI maintains institutional knowledge that persists through team changes and ensures new detection development follows established patterns and best practices.

Democratization of Advanced Detection Engineering

The new workflow enables security analysts and domain experts to contribute directly to detection engineering without requiring deep SIEM expertise. A cloud security specialist can audit and improve cloud-focused detection rules by describing their knowledge of legitimate vs. suspicious activities, while the AI handles the technical implementation details.

This democratization multiplies your team's detection engineering capacity. Instead of being limited by the number of specialized detection engineers, you can leverage the security knowledge of your entire team to improve detection coverage and accuracy.

Embracing the Transformation

The transformation of detection engineering through AI assistance represents a fundamental evolution in how security teams build and maintain their defensive capabilities. We're moving from a world where detection development requires deep technical expertise in multiple domains to one where security knowledge, strategic thinking, and fluency in AI tooling become the primary skillsets.

Early adopters of AI-augmented detection engineering are already seeing significant advantages. They're building and scaling their detection programs without proportional increases in specialized headcount. These teams are shifting their focus from implementation mechanics to strategic threat analysis and program optimization. The key to successful adoption is starting with focused experiments and gradually expanding the scope as confidence builds. Begin with simple rule development, then eventually integrate research and multi-rule creation into your broader workflow.

AI accuracy is still not 100%, and may never be. However, newer frontier reasoning models like Claude 4, o3, and Gemini 2.5 Pro continue pushing the boundaries of what’s possible. Adopting AI now can save your team hundreds of collective hours every month, even if it occasionally makes mistakes, and you will become fluent in the prompting techniques needed to develop agents into trusted assistants.

The introduction of AI-native tooling into SecOps parallels what we've seen in software development with tools like Cursor. The developers who embraced AI assistance became faster at writing code and better at solving problems and building systems. The same evolution is now available to security teams willing to embrace AI-augmented detection engineering.

Continued Reading

[1] https://www.anvilogic.com/report/2025-state-of-detection-engineering